One of the most complex aspects of music is the way a composer organises and relates musical ideas within a composition. Some ideas are introduced to provide contrast and surprise the listener, whereas others are repeated at different times, or varied, to create a sense of familiarity. Not only are these musical patterns closely interrelated, but they can also be decomposed into progressively shorter ideas, reflecting their hierarchical organisation. Human perception of musical structure is thought to depend on the generation of such hierarchies, which is inherently related to the actual organisation of sounds in music and critical to the appreciation of music. To better understand these processes, we are creating algorithms for the automatic detection of musical structure in audio recordings.

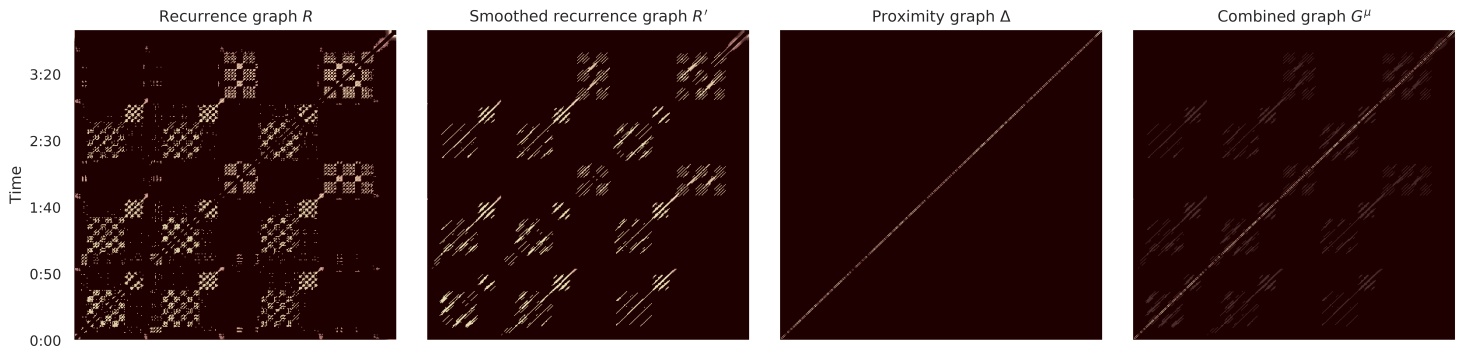

Figure 1. Music graphs extracted from SALAMI 676. The recurrence graph captures similarities between the harmonic features (chroma features) extracted from the track, whereas the proximity graph captures similarities between timbral features (mel-frequency cepstral coefficients). After the recurrence graph has been filtered, the two graphs are combined into a single graph (rightmost).
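The construction described in Figure 1 can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration rather than the method used in this project: the cosine similarity, the mutual k-nearest-neighbour check that stands in for the filtration step, the chain-shaped proximity graph, the weighting parameter `mu`, and the toy feature vectors (which are not taken from SALAMI 676).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def recurrence_graph(features, k=1):
    """Binary graph linking frames that are mutual k-nearest neighbours.

    The mutual-neighbour check is a hypothetical stand-in for the
    filtration step mentioned in the caption.
    """
    n = len(features)
    knn = []
    for i in range(n):
        ranked = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: cosine(features[i], features[j]),
            reverse=True,
        )
        knn.append(set(ranked[:k]))
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in knn[i]:
            if i in knn[j]:  # keep the edge only if both frames agree
                adj[i][j] = adj[j][i] = 1.0
    return adj


def proximity_graph(features):
    """Chain graph: consecutive frames are linked, weighted by similarity."""
    n = len(features)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        w = max(0.0, cosine(features[i], features[i + 1]))  # clip negatives
        adj[i][i + 1] = adj[i + 1][i] = w
    return adj


def combine(rec, prox, mu=0.5):
    """Weighted sum of the two adjacency matrices (mu is an assumed knob)."""
    n = len(rec)
    return [[mu * rec[i][j] + (1 - mu) * prox[i][j] for j in range(n)]
            for i in range(n)]


# Toy frames: frames 0/2 and 1/3 share harmony; timbre drifts over time.
chroma = [[1, 0, 0], [0, 1, 0], [1, 0, 0.1], [0, 1, 0.1]]
mfcc = [[1, 1], [1, 0.9], [0.2, 1], [0.1, 1]]

rec = recurrence_graph(chroma, k=1)
prox = proximity_graph(mfcc)
combined = combine(rec, prox, mu=0.5)
```

In this toy case the combined graph keeps the temporal chain from the proximity graph and adds long-range edges between the harmonically similar frames 0 and 2, and 1 and 3.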

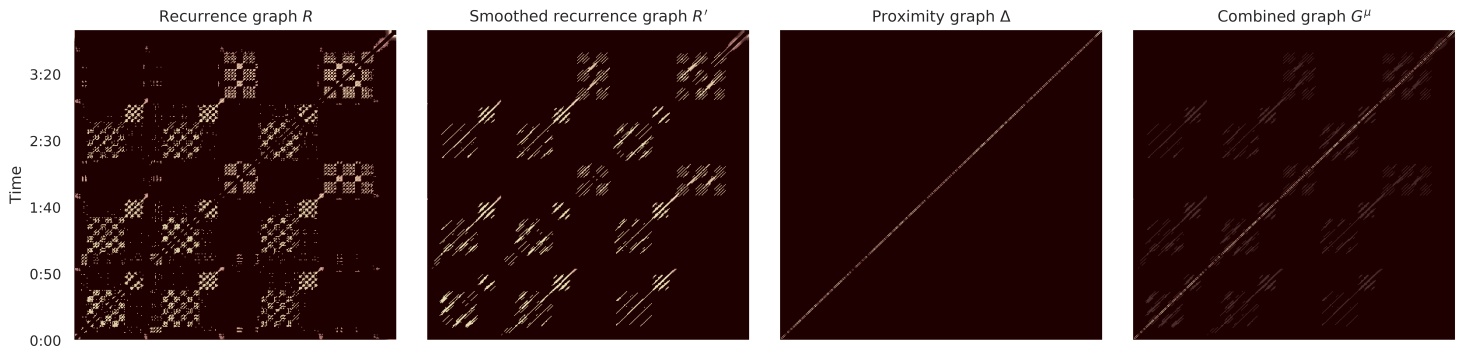

Figure 2. Hierarchical segmentation of "SALAMI 676" with colours identifying communities. The innermost circle corresponds to the first segmentation level in the hierarchy, where all nodes belong to the same community, i.e. the starting point for the community detection algorithm; conversely, every node in the outermost circle forms its own community. The plot thus helps to visualise the structure of the detected communities by illustrating how they progressively break into smaller ones.

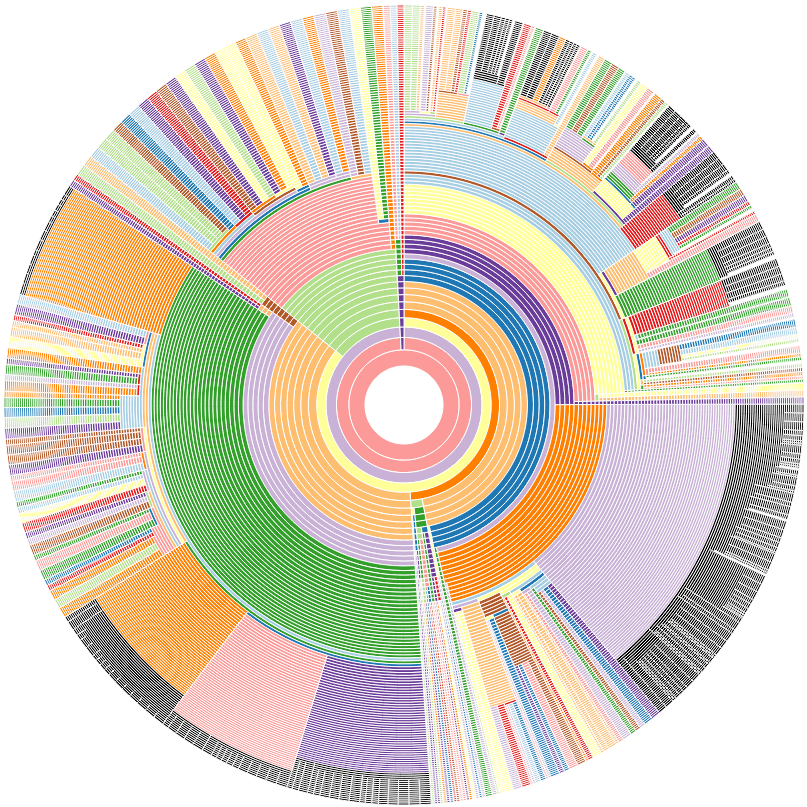

Figure 3. The combined graph obtained from SALAMI 676 (the adjacency matrix plotted in Figure 1) with nodes coloured according to the segmentation of the network at the 18th level (also plotted in Figure 4 and compared with human structural annotations). As inherited from the proximity graph, nodes are connected in a chain to reflect the temporal dimension of music, whereas edges connecting non-consecutive nodes denote harmonic and melodic similarity between them.

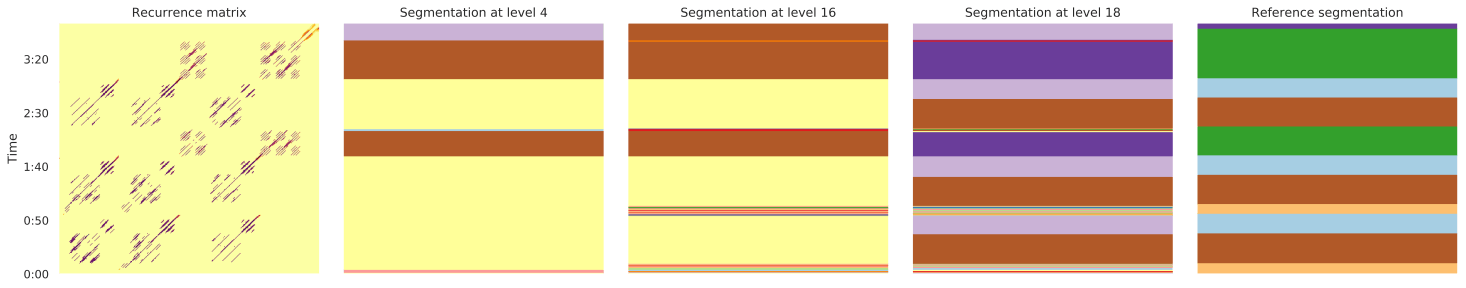

Figure 4. Comparison of the upper-level human segmentation for "SALAMI 676" (rightmost) with the estimated segmentations at levels 4, 16 and 18 in the hierarchy produced by MSCOM. Whereas the structural segmentation at the 18th level has the same granularity as the upper-level reference annotations, the segmentations at levels 4 and 16 uncover superstructures.

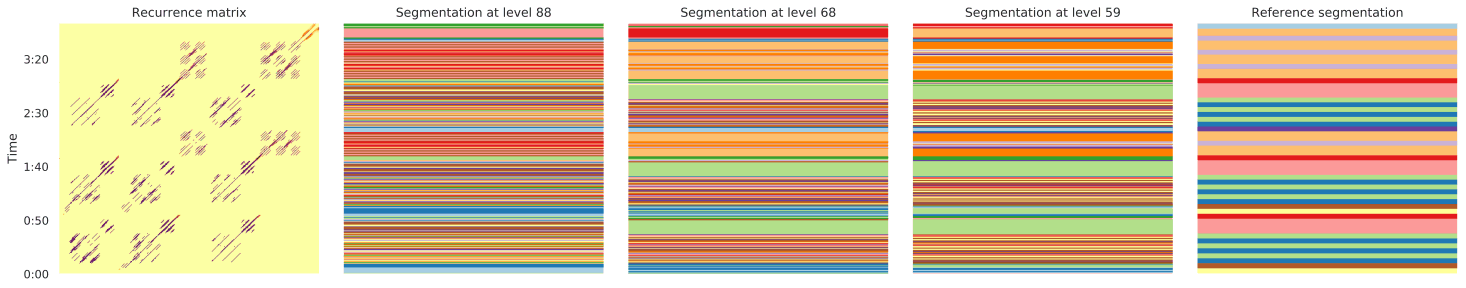

Figure 5. Comparison of the lower-level human segmentation for "SALAMI 676" (rightmost) with the estimated segmentations at levels 88, 68 and 59 in the hierarchy produced by MSCOM. As in the previous figure, whereas the 59th segmentation level aligns best with the lower-level reference annotation, the segmentations at levels 68 and 88 enable the discovery of finer-grained musical structures, providing a deeper analysis of the structure of the piece.
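One way to make the idea of a level "aligning best" with a reference annotation concrete is a boundary hit-rate F-measure: an estimated boundary counts as a hit if it falls within a small time window of a reference boundary. The sketch below is only an illustration under stated assumptions. The 0.5-second window, the greedy one-to-one matching, the function names and the boundary times are all hypothetical, and it is not necessarily how the levels shown in Figures 4 and 5 were selected.

```python
def boundary_f_measure(estimated, reference, window=0.5):
    """F-measure of boundary hits within +/- window seconds.

    Each reference boundary is greedily matched to the closest unused
    estimated boundary (a simplification of optimal matching).
    """
    est = sorted(estimated)
    ref = sorted(reference)
    used = set()
    hits = 0
    for r in ref:
        best = None
        for idx, e in enumerate(est):
            if idx in used or abs(e - r) > window:
                continue
            if best is None or abs(e - r) < abs(est[best] - r):
                best = idx
        if best is not None:
            used.add(best)
            hits += 1
    if hits == 0:
        return 0.0
    precision = hits / len(est)
    recall = hits / len(ref)
    return 2 * precision * recall / (precision + recall)


def best_level(hierarchy, reference, window=0.5):
    """Return the level whose boundaries score highest against the reference."""
    return max(
        hierarchy,
        key=lambda level: boundary_f_measure(hierarchy[level], reference, window),
    )


# Hypothetical boundary times in seconds (not taken from SALAMI 676).
hierarchy = {
    1: [0.0, 60.0],                                  # coarse
    2: [0.0, 20.0, 40.0, 60.0],                      # medium
    3: [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0],    # fine
}
reference = [0.0, 20.2, 39.8, 60.0]

chosen = best_level(hierarchy, reference)
```

With these toy values the medium level is chosen: the coarse level misses half of the reference boundaries (low recall), while the fine level adds boundaries the annotator did not mark (low precision).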

Jacopo de Berardinis

Ph.D. candidate
